In this article, we’ll be exploring strategies, techniques, and best practices to ensure our app stands out in terms of speed, efficiency, and user satisfaction.

Whether it’s a website or a mobile application, users have little patience for slow-loading content. A delay of just a few seconds can lead to frustrated users, increased bounce rates, and lost opportunities.

This is where app speed optimization comes into play – the art of enhancing the performance of your application to provide a seamless and lightning-fast user experience.

Let’s start from the server side.

Server Side Cache

Server-side caching temporarily stores frequently accessed data in the server’s memory or on its disk.

When a user requests a particular piece of content, the server can quickly deliver the cached version instead of generating the content from scratch.

This reduces server load and shortens response times. There are several types of server-side cache; here we focus on file caching.

File Caching

File caching for a REST API involves storing the responses to API requests as files on the server.

When a client makes a request, the server can quickly serve the cached response instead of re-executing the entire request and processing logic.

This reduces the load on the server and significantly improves the API’s response time.
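The idea can be sketched in a few lines of Python. This is only a minimal illustration, not our production code: the cache directory, the 300-second lifetime, and the `get_response` and `build_response` names are all assumptions made for the example.

```python
import hashlib
import json
import tempfile
import time
from pathlib import Path

# Hypothetical cache location and lifetime, chosen only for this sketch.
CACHE_DIR = Path(tempfile.gettempdir()) / "api_file_cache"
CACHE_TTL_SECONDS = 300


def cache_path(request_key: str) -> Path:
    """Map a request (for example, method + URL) to a file name."""
    digest = hashlib.sha256(request_key.encode("utf-8")).hexdigest()
    return CACHE_DIR / f"{digest}.json"


def get_response(request_key, build_response, ttl=CACHE_TTL_SECONDS):
    """Serve the cached file if it is fresh, otherwise rebuild and store it."""
    path = cache_path(request_key)
    if path.exists() and time.time() - path.stat().st_mtime < ttl:
        # Cache hit: skip the expensive processing logic entirely.
        return json.loads(path.read_text(encoding="utf-8"))

    # Cache miss or stale file: run the real logic and save the result.
    response = build_response()
    CACHE_DIR.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(response), encoding="utf-8")
    return response
```

Here `build_response` stands in for whatever database queries and processing the endpoint normally performs; it only runs when no fresh cached file exists.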

Benefits of File Caching for REST API: Why It Matters

- Speedier Response Times: By delivering cached responses, the API can provide near-instantaneous replies to client requests, ensuring minimal wait times.

- Reduced Server Load: Caching helps alleviate server strain by minimizing the need for repetitive computations, database queries, or complex processing.

- Bandwidth Conservation: When cached responses are paired with a validator such as an ETag, unchanged data does not have to be sent again, resulting in lower bandwidth usage.

- Enhanced Scalability: File caching can improve an API’s scalability by allowing the server to handle more requests without the need for additional resources.

- Stable Performance: Caching helps keep response times consistent, even during traffic spikes or high-demand periods.

How to Keep Cached Data in Sync with Updated Data

ETag – An ETag is a string token that a server associates with a response to uniquely identify the state of that response.

If the response at a given URL changes, a new ETag is generated. Comparing two ETags shows whether two representations of a response are the same.

When requesting a response it has already cached, the app sends the stored ETag to the server in the If-None-Match request header. The server compares it with the ETag of the current response.

If both values match, the server sends back a 304 Not Modified status without a body, which tells the app that its cached data is still valid to use.
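The exchange described above can be sketched as follows. This is a simplified illustration rather than a full HTTP server: `make_etag` and `handle_request` are hypothetical helper names, and deriving the ETag from a SHA-256 hash of the body is just one possible choice.

```python
import hashlib


def make_etag(body: bytes) -> str:
    """Derive an ETag from the response body (one possible strategy)."""
    return '"' + hashlib.sha256(body).hexdigest() + '"'


def handle_request(body: bytes, if_none_match=None):
    """Return (status, headers, body) for a GET request.

    `body` is the current response; `if_none_match` is the ETag the
    client sent in its If-None-Match header, if any.
    """
    etag = make_etag(body)
    if if_none_match == etag:
        # The client's cached copy is still valid: no body is sent.
        return 304, {"ETag": etag}, b""
    # First request, or the data changed: send the full response.
    return 200, {"ETag": etag}, body


# First request: full response plus an ETag to remember.
status, headers, payload = handle_request(b'{"banner": "sale"}')

# Later request with the stored ETag: 304 and an empty body.
status_again, _, payload_again = handle_request(
    b'{"banner": "sale"}', if_none_match=headers["ETag"]
)
```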

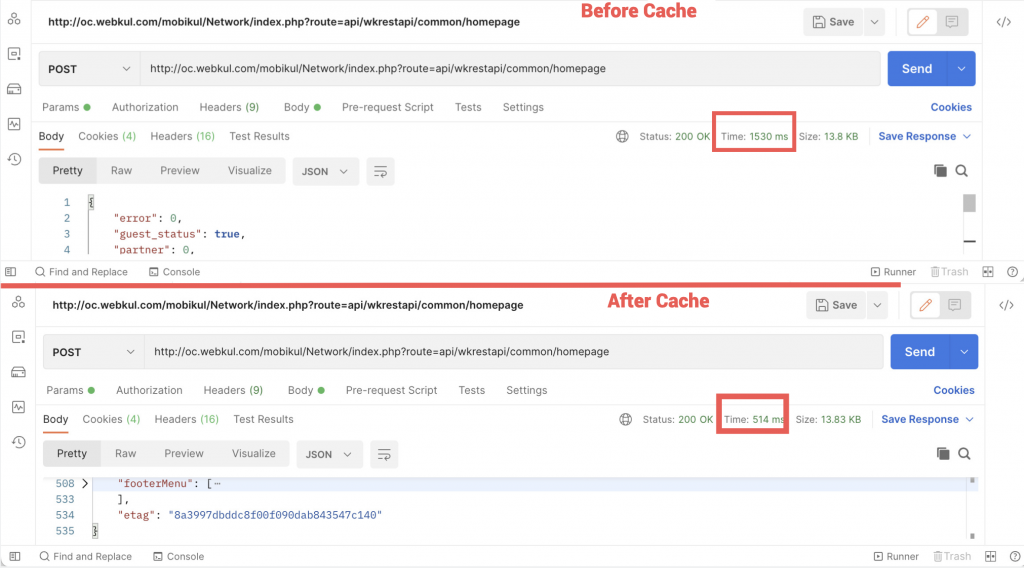

The stats above show our Home Page API’s response times and how much of a difference caching made.

Image Caching

In image caching, images are stored in a cache to improve an app’s performance.

The next time an image is needed, the app looks for it in the cache first. If it is found there, the app loads it from the cache instead of downloading it again.
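A minimal sketch of this cache-first lookup is shown below. The `ImageCache` class, its `download` callback, and the least-recently-used eviction are assumptions made for illustration; a real app would normally rely on an existing image caching library.

```python
from collections import OrderedDict


class ImageCache:
    """In-memory image cache that evicts the least recently used entry."""

    def __init__(self, download, max_items=100):
        self._download = download      # function: url -> image bytes
        self._max_items = max_items    # assumed size limit for the sketch
        self._items = OrderedDict()    # url -> image bytes, oldest first

    def get(self, url):
        if url in self._items:
            # Cache hit: mark as recently used and skip the network.
            self._items.move_to_end(url)
            return self._items[url]

        # Cache miss: download once, then keep it for next time.
        data = self._download(url)
        self._items[url] = data
        if len(self._items) > self._max_items:
            self._items.popitem(last=False)  # drop the oldest entry
        return data
```

The download function is passed in so the same class works with any HTTP client and can be exercised without network access.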

Why Is Image Caching Important?

- Improved Load Times: Caching images reduces load time, so visitors quickly see product images and other content.

- Enhanced User Experience: Faster load times contribute to a positive user experience.

- Mobile Optimization: As image loading time decreases, overall app performance improves.

Payload

A payload is the actual data carried in a request or response over the network, as opposed to headers and other metadata. Data can be sent as a single payload or split across multiple payloads.

Both approaches can be used for speed optimization, depending on the specific requirements of your application.

- Reduced Overhead: Sending data as a single payload can reduce the overhead associated with multiple requests or messages. This can lead to faster communication between components, especially in scenarios where frequent data exchanges are needed.

- Minimized Network Latency: In situations where network latency is a concern, a single payload can reduce the number of round-trips between the client and server, resulting in quicker data transfer.

- Batch Processing: When dealing with batch processing, aggregating data into a single payload can optimize the processing time, as the server can handle multiple records or operations in a single request.

- Parallel Processing: Multiple payloads can enable parallel processing of data, allowing different parts of a system to handle individual payloads concurrently. This can lead to improved throughput and faster data processing.

- Streaming and Real-Time Data: When dealing with real-time data or data streams, using multiple payloads can enable continuous data transmission and processing, leading to timely updates and responsiveness.

- Scalability: Distributing data across multiple payloads can facilitate load balancing and scalability, as different components can process payloads independently and in parallel.

- Partial Data Retrieval: When fetching data from a server, multiple payloads can be used to request only the necessary portions of data, reducing the amount of data transferred and improving response times.
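To make the trade-off concrete, here is a small sketch that splits the same records into one payload or several. The `split_into_payloads` helper and the page size are hypothetical and only illustrate how the number of payloads (and therefore round-trips) changes.

```python
def split_into_payloads(records, page_size):
    """Split records into consecutive payloads of at most page_size items."""
    if page_size < 1:
        raise ValueError("page_size must be at least 1")
    return [
        records[start:start + page_size]
        for start in range(0, len(records), page_size)
    ]


products = list(range(10))  # stand-in for ten product records

# Single payload: one round-trip carries everything.
single = split_into_payloads(products, page_size=len(products))

# Multiple payloads: smaller pieces that can be fetched on demand
# or processed in parallel, at the cost of more round-trips.
multiple = split_into_payloads(products, page_size=4)

print(len(single), len(multiple))  # prints "1 3"
```

Which side of the trade-off wins depends on the screen: a small home page response suits a single payload, while a long product list is usually better served in pages.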

App Side Cache

Implementing caching on the app side involves storing and managing frequently used data locally. This can significantly improve the app’s speed and responsiveness.

We can use any suitable local database, depending on requirements. Since our Opencart app is built with Flutter, we used Hive to store API responses locally.

To learn more about app-side caching with Hive, including an example, see the article linked below.

API Caching Using Hive and Bloc

Prefetching Data Concept

With the approaches above, we have already optimized our app’s speed considerably. Prefetching lets us optimize it further.

What is Prefetching?

Prefetching means loading data in a background thread before it is required and storing it locally.

That way, when we access the data, we don’t have to wait for it to load from the server. We can still request updated data on a background thread.
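The concept can be sketched with a thread pool. This is a simplified illustration, not our Flutter implementation: the `Prefetcher` class and its `fetch` callback are hypothetical, and the sketch assumes `prefetch` and `get` are called from a single thread (for example, the UI thread).

```python
from concurrent.futures import ThreadPoolExecutor


class Prefetcher:
    """Load data on background threads before the user asks for it."""

    def __init__(self, fetch, max_workers=4):
        self._fetch = fetch                      # function: key -> data
        self._pool = ThreadPoolExecutor(max_workers=max_workers)
        self._pending = {}                       # key -> Future

    def prefetch(self, key):
        """Start loading in the background; returns immediately."""
        if key not in self._pending:
            self._pending[key] = self._pool.submit(self._fetch, key)

    def get(self, key):
        """Return the data, waiting only if the prefetch has not finished."""
        self.prefetch(key)  # falls back to a normal load if never prefetched
        return self._pending[key].result()

    def close(self):
        """Wait for outstanding loads and release the worker threads."""
        self._pool.shutdown(wait=True)
```

A home screen could call `prefetch` for every visible product ID as soon as the list is rendered, so that `get` usually returns without waiting when a product tile is tapped.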

For example, in our demos we prefetch the product details for the products shown on the homepage.

Another example is Opencart Headless PWA (progressive web application).

As a result, the product detail screen is just a tap away: as soon as the user taps a product tile, the product detail screen shows the data.

Conclusion

This is how we enhanced our Opencart app’s speed and performance to provide a seamless user experience. Please check the video below to see the difference.

Thanks for reading this article. Feel free to share your ideas or any feedback.
