Machine Learning in Flutter Apps

Machine Learning is a way of making machines learn and improve, much as humans do, with the help of data and algorithms.

It is a sub-domain of Artificial Intelligence (AI).

Machines cannot make decisions on their own, but Machine Learning makes this possible.

In Machine Learning, machines learn and improve from data and from their past analysis, so they can make predictions without being explicitly programmed for each case.


The most widely used domains of Machine Learning in Flutter apps are:

- Vision – Takes an image as input and analyzes and interprets its contents. E.g., Text Recognition.

- NLP (Natural Language Processing) – Analyzes and understands human language. E.g., Language Identification.

We will integrate Machine Learning into a Flutter app with the help of the google_ml_kit plugin. To pick the image to analyze, we use the image_picker plugin.

google_ml_kit supports multiple categories, such as:

- Text Recognition

- Image Labeling

- Face Detection

- Pose Detection

- Selfie Segmentation

- Object Detection

Integration of Machine Learning in Flutter Apps

Let’s begin by adding the dependencies to our pubspec.yaml file:

```yaml
google_ml_kit: ^0.14.0
image_picker: ^0.8.7+4
```

To check the latest versions of these dependencies, visit pub.dev.

To run any of these detections, we first need to pick an image from either the camera or the gallery using the image_picker plugin.

Here’s the code for it:

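```dart
// Lives inside a State class that declares `XFile? imageFile` and `String result`.
Future<void> pickImage(ImageSource source) async {
  try {
    // The picker call sits inside the try block so its failures are caught too.
    final pickedImage = await ImagePicker()
        .pickImage(source: source, maxHeight: 300, maxWidth: 300);
    // pickedImage is null when the user dismisses the picker.
    if (pickedImage != null) {
      setState(() {
        imageFile = pickedImage;
      });
    }
  } catch (e) {
    setState(() {
      result = "Error!!";
    });
  }
}
```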

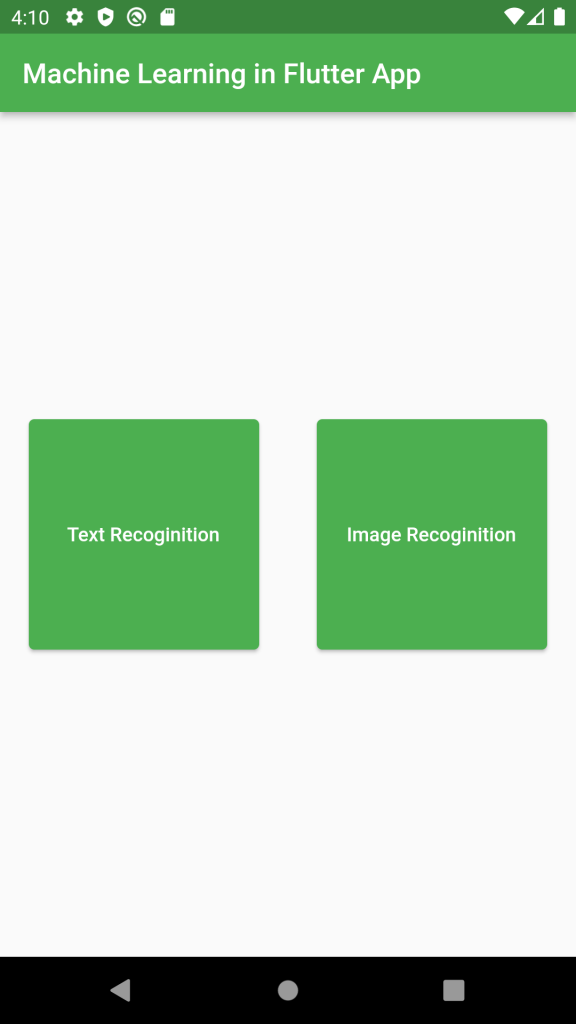

Reference Image –

This code uses a try/catch block because the image may not be picked correctly, and a null check because the user may dismiss the picker without choosing an image.

Text Recognition

Text Recognition recognizes text in any Latin-based character set.

It can recognize text in real time on a wide range of devices.

The recognized text is organized into three levels:

- Block – A set of adjoining text lines within the image.

- Line – A set of adjoining elements on the same axis.

- Element – A set of adjoining alphanumeric characters that form a complete word.
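The nesting of these three levels can be sketched in plain Dart. This is only an illustration under stated assumptions: `DemoBlock`, `DemoLine`, `DemoElement`, and `outline` are hypothetical names defined here so the example runs without Flutter, and they are not the plugin's classes. The plugin's recognition result is expected to follow the same nesting (blocks containing lines, lines containing elements), so a similar pair of nested loops should apply to it.

```dart
// Hypothetical stand-ins for the three levels of recognized text.
// They are NOT the plugin's classes; they only mirror the nesting.
class DemoElement {
  const DemoElement(this.text);
  final String text; // a single word
}

class DemoLine {
  const DemoLine(this.elements);
  final List<DemoElement> elements;

  // A line's text is its words joined by spaces.
  String get text => elements.map((e) => e.text).join(' ');
}

class DemoBlock {
  const DemoBlock(this.lines);
  final List<DemoLine> lines;

  // A block's text is its lines joined by newlines.
  String get text => lines.map((l) => l.text).join('\n');
}

/// Walks blocks, then lines, then elements, and returns an indented outline.
String outline(List<DemoBlock> blocks) {
  final sb = StringBuffer();
  for (final block in blocks) {
    sb.writeln('Block');
    for (final line in block.lines) {
      sb.writeln('  Line: ${line.text}');
      for (final element in line.elements) {
        sb.writeln('    Element: ${element.text}');
      }
    }
  }
  return sb.toString();
}
```

Calling `outline` on a block whose two lines read "Hello World" and "Flutter" yields one `Block` entry, two `Line` entries, and three `Element` entries, one per word.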

Here, we have created a Dart file named lib/textRecognitionScreen.dart:

```dart
import 'dart:io';

import 'package:flutter/material.dart';
import 'package:google_ml_kit/google_ml_kit.dart';
import 'package:image_picker/image_picker.dart';

class TextRecognitionScreen extends StatefulWidget {
  const TextRecognitionScreen({Key? key}) : super(key: key);

  @override
  State<TextRecognitionScreen> createState() => _TextRecognitionScreenState();
}

class _TextRecognitionScreenState extends State<TextRecognitionScreen> {
  bool isScanning = false;
  XFile? imageFile;
  String result = "";

  @override
  Widget build(BuildContext context) {
    return Scaffold(
      appBar: AppBar(
        backgroundColor: Colors.green,
        title: const Text('Text Recognition in Flutter App'),
      ),
      body: Center(
        child: Column(
          crossAxisAlignment: CrossAxisAlignment.center,
          mainAxisAlignment: MainAxisAlignment.center,
          children: [
            // Show a placeholder until the user picks an image.
            imageFile == null
                ? Container(
                    decoration: BoxDecoration(
                      border: Border.all(color: Colors.grey, width: 2.0),
                      borderRadius: BorderRadius.circular(8.0),
                    ),
                    width: 200,
                    height: 200,
                    child: Image.asset('assets/placeholder.png'),
                  )
                : Image.file(File(imageFile!.path)),
            const SizedBox(height: 40.0),
            // Recognized text (or an error message) appears here.
            Text(result, style: const TextStyle(fontSize: 20.0)),
            const SizedBox(height: 40.0),
            SizedBox(
              width: MediaQuery.of(context).size.width / 1.5,
              height: 50.0,
              child: Padding(
                padding: const EdgeInsets.symmetric(horizontal: 8.0),
                child: ElevatedButton(
                  onPressed: () => showImageSourceDialog(context),
                  child: const Text("Start"),
                ),
              ),
            ),
          ],
        ),
      ),
    );
  }

  Future<void> pickImage(ImageSource source) async {
    // Close the bottom sheet before the picker opens.
    Navigator.of(context).pop();
    try {
      final pickedImage = await ImagePicker()
          .pickImage(source: source, maxHeight: 300, maxWidth: 300);
      // Stop if the user cancelled or this screen was closed in the meantime.
      if (pickedImage == null || !mounted) return;
      setState(() {
        imageFile = pickedImage;
        isScanning = true;
      });
      // Awaited so that recognition errors reach the catch block below.
      await getTextFromImage(pickedImage);
    } catch (e) {
      if (!mounted) return;
      setState(() {
        isScanning = false;
        imageFile = null;
        result = "Error!!";
      });
    }
  }

  Future<void> getTextFromImage(XFile image) async {
    final inputImage = InputImage.fromFilePath(image.path);
    final textDetector =
        GoogleMlKit.vision.textRecognizer(script: TextRecognitionScript.latin);
    try {
      final RecognizedText recognisedText =
          await textDetector.processImage(inputImage);
      if (!mounted) return;
      setState(() {
        result = recognisedText.text;
        isScanning = false;
      });
    } finally {
      // Release the recognizer even if processing fails.
      await textDetector.close();
    }
  }

  void showImageSourceDialog(BuildContext context) {
    showModalBottomSheet(
      context: context,
      builder: (BuildContext context) {
        return Column(
          crossAxisAlignment: CrossAxisAlignment.start,
          mainAxisSize: MainAxisSize.min,
          children: [
            const Padding(
              padding: EdgeInsets.all(8.0),
              child: Text(
                "Select Image From",
                style: TextStyle(fontSize: 18.0, fontWeight: FontWeight.bold),
              ),
            ),
            GestureDetector(
              onTap: () => pickImage(ImageSource.gallery),
              child: const ListTile(
                leading: Icon(Icons.photo),
                title: Text('Gallery'),
              ),
            ),
            GestureDetector(
              onTap: () => pickImage(ImageSource.camera),
              child: const ListTile(
                leading: Icon(Icons.camera),
                title: Text('Camera'),
              ),
            ),
          ],
        );
      },
    );
  }
}
```

Here’s the output:

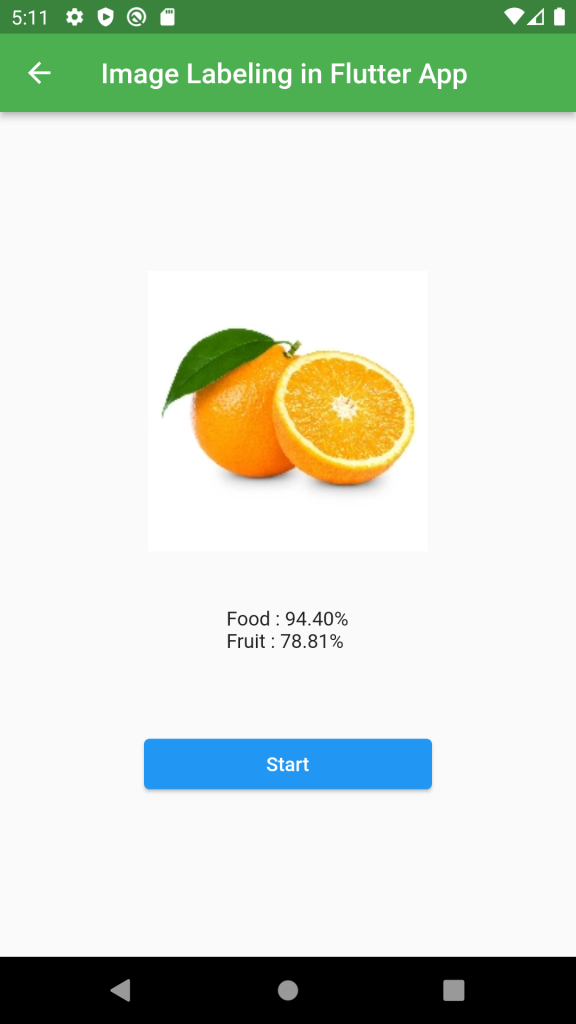

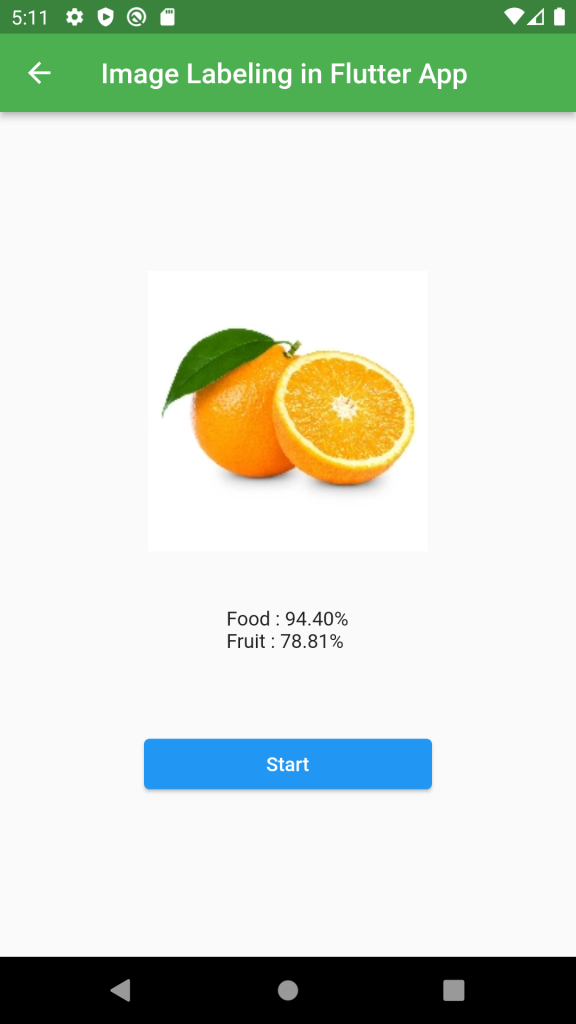

Image Labeling

Image Labeling is a technique in which the ML Kit API identifies what an image contains and assigns it labels from a set of known categories. The default image labeling model can identify general objects, places, animal species, products, and many more elements.

Here, we have created a Dart file named lib/imageLabelingScreen.dart:

```dart
import 'dart:io';

import 'package:flutter/material.dart';
import 'package:google_ml_kit/google_ml_kit.dart';
import 'package:image_picker/image_picker.dart';

class ImageLabelingScreen extends StatefulWidget {
  const ImageLabelingScreen({Key? key}) : super(key: key);

  @override
  State<ImageLabelingScreen> createState() => _ImageLabelingScreenState();
}

class _ImageLabelingScreenState extends State<ImageLabelingScreen> {
  bool isScanning = false;
  XFile? imageFile;
  String result = "";

  @override
  Widget build(BuildContext context) {
    return Scaffold(
      appBar: AppBar(
        backgroundColor: Colors.green,
        title: const Text('Image Labeling in Flutter App'),
      ),
      body: Center(
        child: Column(
          mainAxisAlignment: MainAxisAlignment.center,
          crossAxisAlignment: CrossAxisAlignment.center,
          children: [
            // Show a placeholder until the user picks an image.
            imageFile == null
                ? Container(
                    decoration: BoxDecoration(
                      border: Border.all(color: Colors.grey, width: 2.0),
                      borderRadius: BorderRadius.circular(8.0),
                    ),
                    width: 200,
                    height: 200,
                    child: Image.asset('assets/placeholder.png'),
                  )
                : Image.file(File(imageFile!.path)),
            const SizedBox(height: 40.0),
            // One "label : confidence%" entry per line.
            Text(result),
            const SizedBox(height: 40.0),
            SizedBox(
              width: MediaQuery.of(context).size.width / 2,
              child: ElevatedButton(
                onPressed: () => showImageSourceDialog(context),
                child: const Text("Start"),
              ),
            ),
          ],
        ),
      ),
    );
  }

  Future<void> pickImage(ImageSource source) async {
    // Close the bottom sheet before the picker opens.
    Navigator.of(context).pop();
    try {
      final pickedImage = await ImagePicker()
          .pickImage(source: source, maxHeight: 200, maxWidth: 200);
      // Stop if the user cancelled or this screen was closed in the meantime.
      if (pickedImage == null || !mounted) return;
      setState(() {
        imageFile = pickedImage;
        isScanning = true;
      });
      // Awaited so that labeling errors reach the catch block below.
      await processImage(pickedImage);
    } catch (e) {
      debugPrint("Exception $e");
      if (!mounted) return;
      setState(() {
        isScanning = false;
        imageFile = null;
        result = "Error!!";
      });
    }
  }

  Future<void> processImage(XFile image) async {
    final inputImage = InputImage.fromFilePath(image.path);
    // Labels below the confidence threshold are not returned.
    final imageLabeler = ImageLabeler(
        options: ImageLabelerOptions(confidenceThreshold: 0.75));
    try {
      final List<ImageLabel> labels =
          await imageLabeler.processImage(inputImage);
      final sb = StringBuffer();
      for (final ImageLabel imgLabel in labels) {
        // Format each label as "name : 87.50%".
        sb.write(imgLabel.label);
        sb.write(" : ");
        sb.write((imgLabel.confidence * 100).toStringAsFixed(2));
        sb.write("%\n");
      }
      if (!mounted) return;
      setState(() {
        result = sb.toString();
        isScanning = false;
      });
    } finally {
      // Release the labeler even if processing fails.
      await imageLabeler.close();
    }
  }

  void showImageSourceDialog(BuildContext context) {
    showModalBottomSheet(
      context: context,
      builder: (BuildContext context) {
        return Column(
          crossAxisAlignment: CrossAxisAlignment.start,
          mainAxisSize: MainAxisSize.min,
          children: [
            const Padding(
              padding: EdgeInsets.all(8.0),
              child: Text(
                "Select Image From",
                style: TextStyle(fontSize: 18.0, fontWeight: FontWeight.bold),
              ),
            ),
            GestureDetector(
              onTap: () => pickImage(ImageSource.gallery),
              child: const ListTile(
                leading: Icon(Icons.photo),
                title: Text('Gallery'),
              ),
            ),
            GestureDetector(
              onTap: () => pickImage(ImageSource.camera),
              child: const ListTile(
                leading: Icon(Icons.camera),
                title: Text('Camera'),
              ),
            ),
          ],
        );
      },
    );
  }
}
```

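Within this code block, we have also specified a confidence threshold.

The confidence score is a value between 0 and 1 that indicates how sure the model is that a label correctly describes the image. With confidenceThreshold set to 0.75, labels the model is less than 75% sure about are left out of the results.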
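To make the role of the confidence value concrete, here is a minimal plain-Dart sketch of filtering labels by a threshold and formatting them as percentages, similar in spirit to what processImage does with the labeler's results. `LabelScore` and `formatLabels` are hypothetical names introduced for illustration and are not part of google_ml_kit. The 0.75 default mirrors the confidenceThreshold used in the code, and treating a score exactly equal to the threshold as a match is this sketch's own choice.

```dart
// Hypothetical stand-in for one labeling result: a label and its confidence.
class LabelScore {
  const LabelScore(this.label, this.confidence);
  final String label;
  final double confidence; // expected to lie between 0.0 and 1.0
}

/// Keeps labels at or above [threshold], sorts them from most to least
/// confident, and formats each as "label : NN.NN%" on its own line.
String formatLabels(List<LabelScore> labels, {double threshold = 0.75}) {
  final kept = labels.where((l) => l.confidence >= threshold).toList()
    ..sort((a, b) => b.confidence.compareTo(a.confidence));
  final sb = StringBuffer();
  for (final l in kept) {
    sb.writeln('${l.label} : ${(l.confidence * 100).toStringAsFixed(2)}%');
  }
  return sb.toString();
}
```

With the default threshold, a label scored 0.5 is dropped while labels scored 0.8 and 0.9 are kept, with the 0.9 label listed first.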

Here’s the Output-

Conclusion

In this blog, we have discussed how to use Machine Learning in Flutter Apps.

I hope it will help you understand.

Read more interesting Flutter Blogs by Mobikul.

Thanks for reading!!
